Powering Data Centers

One challenge in building data centers is finding the energy to power them!

Inspired by

, specifically the post on solar-powered data centers, I've put together a video and a simple tool to compare combinations of wind, solar and gas in any part of the world. This is a little different from my usual training and inference content, but relevant to LLMs nonetheless!

Thoughts on OpenAI’s o1

There are two papers in particular I’ve found relevant, and there are more I’ve yet to review:

Let’s Verify Step by Step - OpenAI paper from 2023 with Sutskever on it, and

Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters - a very recent Google paper.

I wrote out some of my thoughts on X, copied here:

How does o1 have so many output tokens?

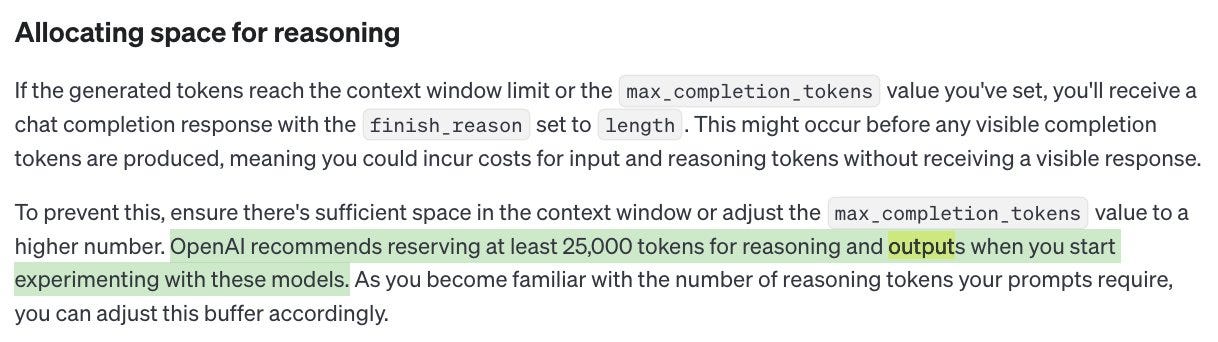

Perhaps the key reveal on o1 is in the API docs:

OpenAI recommends allowing for 25k tokens for reasoning and outputs.

Yes, when I ask o1 a question, I get many tokens in response, but not 25k—maybe I get 1000 to 2000 tokens.

So, that means there can be 20k+ tokens for thinking.

But o1 infers at about 20-30 tokens per second. So generating 20k tokens in series would take roughly 11 to 17 minutes! That doesn't make a lot of sense.
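As a quick sanity check, here is that arithmetic as a small Python sketch. The function name is just illustrative, and the 20k tokens and 20-30 tokens per second are the rough figures from above, not measured values.

```python
def serial_generation_minutes(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens one after another, in minutes."""
    return num_tokens / tokens_per_second / 60


# Rough figures from the text: ~20k thinking tokens at 20-30 tokens/second.
for rate in (20, 30):
    minutes = serial_generation_minutes(20_000, rate)
    print(f"{rate} tok/s -> {minutes:.1f} minutes")
# 20 tok/s -> 16.7 minutes
# 30 tok/s -> 11.1 minutes
```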

Instead, my guess is that the model, during the thinking phase, uses parallel sampling, with a classifier to pick out the best answer.

Why sampling makes sense

Particularly for reasoning/logic questions (but actually for any question where there can be preferences), it is known that sampling multiple responses from an LLM increases the chances of finding the right one. This is part of what Jack Cole has done on the ARC Prize.

You can also see how sampling helps in the Monte Carlo video I did on YouTube.

Now, o1 doesn't a priori know whether a "correct" answer exists for the problem or not, so a classifier needs to be trained to pick out "correct" answers. That's covered in OpenAI's 2023 paper titled "Let's Verify Step by Step."

Basically, you would run (in parallel, which is good for inference utilization) say 32 different samples, maybe even off o1 mini for speed, as highly sampled small models can outperform larger models, and pick out the best of them.
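A minimal sketch of that best-of-N idea, assuming you have some way to sample an answer and some classifier that scores it. Both `generate` and `score` are hypothetical stand-ins here (a random guesser and a toy scorer), not real model or OpenAI API calls, and a real system would batch the samples in parallel rather than loop.

```python
import random
from typing import Callable, Tuple


def best_of_n(
    prompt: str,
    generate: Callable[[str], str],      # hypothetical: samples one answer
    score: Callable[[str, str], float],  # hypothetical: classifier/verifier score
    n: int = 32,
) -> Tuple[float, str]:
    """Sample n candidate answers and return (score, answer) for the best one."""
    candidates = [generate(prompt) for _ in range(n)]  # batched in practice
    scored = [(score(prompt, c), c) for c in candidates]
    return max(scored, key=lambda pair: pair[0])


# Toy stand-ins: the "model" guesses a number, the "classifier" prefers 42.
rng = random.Random(0)
toy_generate = lambda prompt: str(rng.randint(30, 50))
toy_score = lambda prompt, answer: -abs(int(answer) - 42)

best_score, best_answer = best_of_n("What is 17 + 25?", toy_generate, toy_score)
print(best_answer, best_score)
```

The quality of the result depends entirely on the classifier: if it scores a wrong answer highly, more samples will not help.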

Is this parallel approach good enough?

Probably not. Some reasoning problems may take 1000 samples or more to find the right answer.
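To get a feel for those numbers, here is a rough sketch. It assumes each sample is independently correct with probability `p` and that the classifier always recognizes a correct answer when one is present. Both are simplifying assumptions of mine, and the function names are illustrative.

```python
import math


def prob_any_correct(p: float, n: int) -> float:
    """Chance that at least one of n independent samples is correct."""
    return 1 - (1 - p) ** n


def samples_needed(p: float, target: float) -> int:
    """Smallest n for which prob_any_correct(p, n) reaches the target."""
    return math.ceil(math.log(1 - target) / math.log(1 - p))


# The rarer a correct sample is, the faster the required sample count grows.
for p in (0.1, 0.01, 0.003):
    n = samples_needed(p, target=0.95)
    print(f"p={p}: about {n} samples for a 95% chance of one correct answer")
```

Under these assumptions, a problem the model solves only a few times per thousand attempts lands in the range of a thousand samples, which is why plain parallel sampling gets expensive.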

But, there's a way to do better.

Instead of classifying full "thinking" answers, you classify at each step of thinking.

Perhaps you start by running a batch of 64 for the first paragraph of the response. You keep the top eight, then run another batch of 8 for each of those eight.

You can repeat this in whatever way you have ablated as optimal until you reach some budget of tokens. Ideally, the token budget is made dependent on the difficulty, perhaps via another classifier.

Once done, you take the best overall answer, as rated by the classifier, and feed that as the "thinking" information for generating user outputs.
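The step-by-step search described above can be sketched roughly as follows. To be clear, this is my guess turned into code, not how o1 is known to work. `propose_step` and `score` are hypothetical stand-ins for a model call and a step-level classifier, and counting whitespace-separated words is only a crude proxy for tokens.

```python
import random
from typing import Callable, List

Steps = List[str]


def stepwise_search(
    propose_step: Callable[[Steps], str],  # hypothetical: sample the next step
    score: Callable[[Steps], float],       # hypothetical: step-level classifier
    first_batch: int = 64,
    beam_width: int = 8,
    branch: int = 8,
    max_steps: int = 4,
    token_budget: int = 20_000,
) -> Steps:
    """Keep the best partial answers at each step and expand only those."""

    def expand(prefixes: List[Steps], samples_per_prefix: int) -> List[Steps]:
        return [p + [propose_step(p)] for p in prefixes for _ in range(samples_per_prefix)]

    def new_tokens(candidates: List[Steps]) -> int:
        return sum(len(c[-1].split()) for c in candidates)  # crude token proxy

    candidates = expand([[]], first_batch)  # e.g. 64 first paragraphs
    used = new_tokens(candidates)
    beam = sorted(candidates, key=score, reverse=True)[:beam_width]
    for _ in range(max_steps - 1):
        if used >= token_budget:
            break
        candidates = expand(beam, branch)  # e.g. 8 continuations per survivor
        used += new_tokens(candidates)
        beam = sorted(candidates, key=score, reverse=True)[:beam_width]
    return beam[0]  # best overall chain, to be fed in as the "thinking"


# Toy stand-ins: each step is a random digit, the classifier prefers larger sums.
rng = random.Random(0)
best = stepwise_search(
    propose_step=lambda prefix: str(rng.randint(0, 9)),
    score=lambda steps: sum(int(s) for s in steps),
)
print(best)
```

The batch sizes, beam width and budget are exactly the kind of hyper-parameters you would need to ablate, and a difficulty classifier could set `token_budget` per question.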

This could definitely be wrong, and I have more papers to read. But it strikes me as odd that one might generate as many as 20k tokens in series, and that doing so could be better than parallelization. At some point, it just becomes better to parallelize than to serially improve on previous answers.

Humans, too, often drop or forget one approach and move to a different angle, in which case you may not want all previous approaches in context. This also partly explains why it doesn't make sense competitively to reveal the thinking, at least not yet. If there are hyper-parameters involved, competition may mean it becomes optimal to allow developers to customize those parameters for their use cases.

That’s it for this week, cheers, Ronan

More Resources at Trelis.com/About